Open source & OpenAI's "big beautiful buildings"

But where, oh where, has the value accretion gone?

Last week, researchers at Stanford and the University of Washington published a paper showing how they built a reasoning model that's competitive with proprietary frontier models like OpenAI's o1, and they did it using an open-source LLM and $50 worth of Google Cloud credits. This will have two somewhat counterintuitive effects on AI labs and market leaders like OpenAI: it will increase the pressure to build more physical infrastructure (not less), and to build it faster; and it will force them to reexamine their relationship with open source, perhaps to the point of releasing some of their models for free. Here’s why:

OpenAI has taken a lot of heat over the last few years for the self-evident irony of a for-profit company a) calling itself OpenAI while b) not open-sourcing its models and c) not being particularly open about how those models were trained.

What’s the upside in staying closed? Well, they’ve been first out of the gate on basically every major advancement in AI architecture in the last five years – scaling pre-training, distilling large models into smaller models, chain of thought, agents… the list goes on. Staying closed has allowed OpenAI to maintain a consistent 6-12 month lead over the rest of the pack – and at the speed things move now, 6-12 months is practically an eternity.

But if you've been paying attention to AI progress over the last few years you've known for a while that frontier models are rapidly being commoditized. The DeepSeek news cycle in January made this particularly stark, but it was really just another data point in a broader trend; Meta's entire open-source AI strategy was designed to create precisely this kind of downward pricing pressure for frontier models made by competitors. ("So that value accretion moves to the application layer, we love GPT wrappers!" said every VC and Tech Thought Leader last week. Thanks, guys, and welcome to the party.)

That strategy has proven to be very effective for Meta, and it's one of the reasons why the cost of using frontier models continues to fall by an order of magnitude every few months.

It’s also why OpenAI, a software business, has started investing heavily in Things That Are Not Software – like chips, data centers, and energy. In five years frontier models will have very little defensible value on their own; OpenAI’s moat will have to come from the capital-intensive infrastructure they rent out and the distribution channels they own.

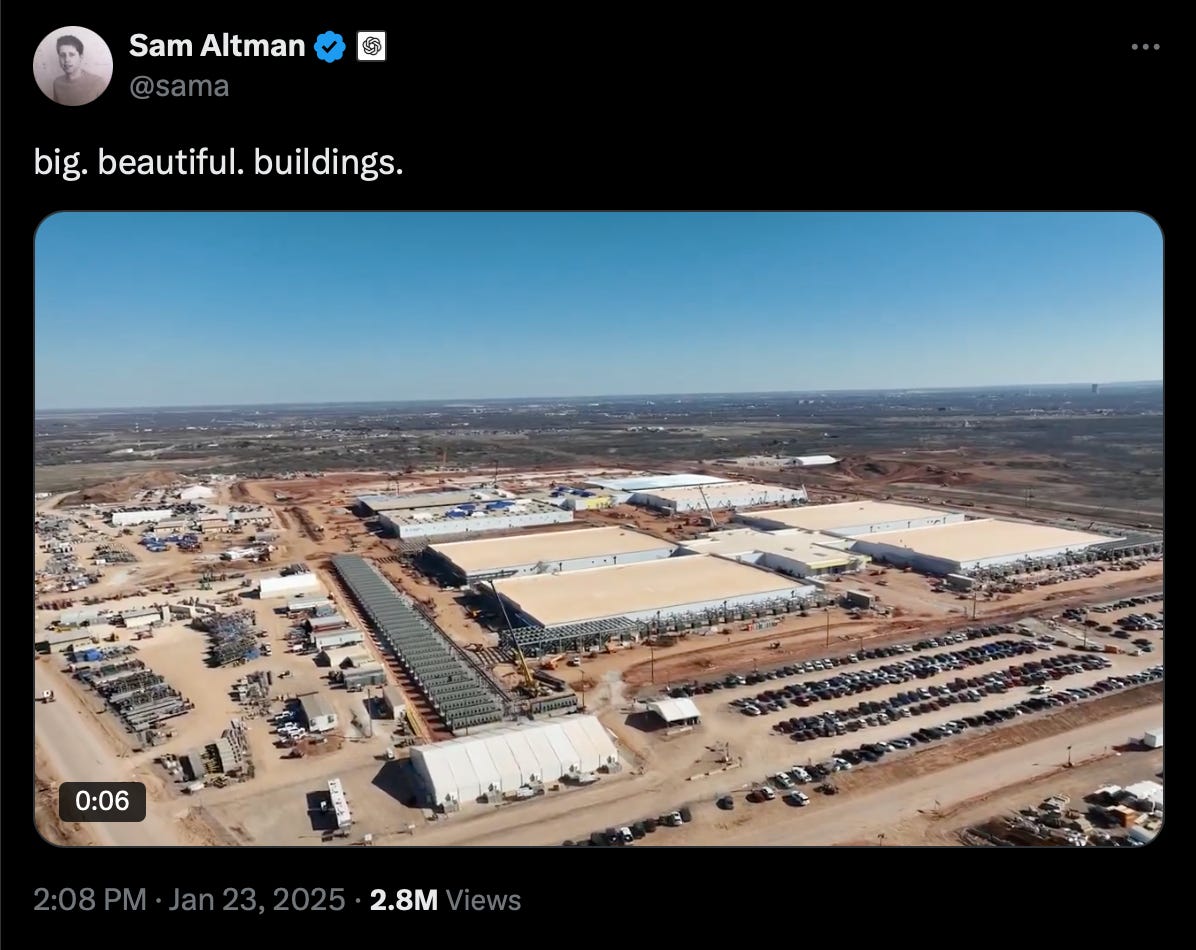

Cue the strange spectacle of Sam Altman doing his best Trump-in-Atlantic-City impression on X:

Weird.

So, what does all of this have to do with the Stanford and UW researchers? Those researchers were able to take an off-the-shelf open-source LLM and turn it into a state-of-the-art reasoning model simply by asking Google’s (proprietary) Gemini reasoning model about a thousand questions and training their model on its answers.

In plain English, that means they were able to give their midwit open-source model superpowers by extracting the capabilities of a genius proprietary model.

And they did it in 26 minutes.

Forget DeepSeek’s (highly misleading) $6 million model claim. If proprietary models can effectively have their reasoning capabilities exfiltrated for 50 bucks, then no amount of rate limiting or threatening legalese will stop open-source imitators from flooding the market every time OpenAI pushes out a new release. Staying closed just went from buying them a 6-12 month edge on go-to-market strategy, product development, and marketing to buying them… perhaps a half hour?

That’s hardly a death sentence for OpenAI, and none of that should be taken to mean that they’ve over-invested in infrastructure – quite the opposite. But it does mean they’ll have to become a different kind of company over the next few years – one that manages lots of big. beautiful. buildings. – and they have little to lose and a lot to gain from returning to their open-source roots.

It also means you can forget about AI safety – but we already knew that. When OpenAI released their advanced new Deep Research product last week, they didn’t even bother with the usual red-teaming, safety testing, and reporting.

Never mind all that now. It’s time to build.

Just before launching Deep Research, Altman said in a Reddit AMA that he personally thought OpenAI had been on the “wrong side of history” on the open source question, but that it wasn’t the company’s highest priority and that not everyone at OpenAI shared that view.

And who knows – maybe he’s had a genuine change of heart and now believes, like this guy, that open source is the best path forward for AI research.

Or maybe he just sees the writing on the wall; within a day of the Deep Research launch, it had already been cloned by researchers in the open-source community.

So don’t be surprised if OpenAI actually starts open-sourcing at least some of their models this year, even while they spend SoftBank cash at a pace that would make Adam Neumann blush. And don’t fall for it when they wrap that strategic shift in a metric ton of virtue signaling.

Talk is cheap. Buildings aren’t. As always, it’s all about the ~~value accretion~~ money.